I know I haven’t been writing a lot recently, and that’s simply because I haven’t had time to gather my thoughts: I’ve been taking fewer showers due to the water shortage in the country (yes, I’m a shower thinker).

So, here is a lightweight post about something I got wrong earlier. Next week I'll tackle the heavier stuff.

Sapere aude

Horace

Background

I wouldn’t call myself an AI sceptic, but more of an AI agnostic.

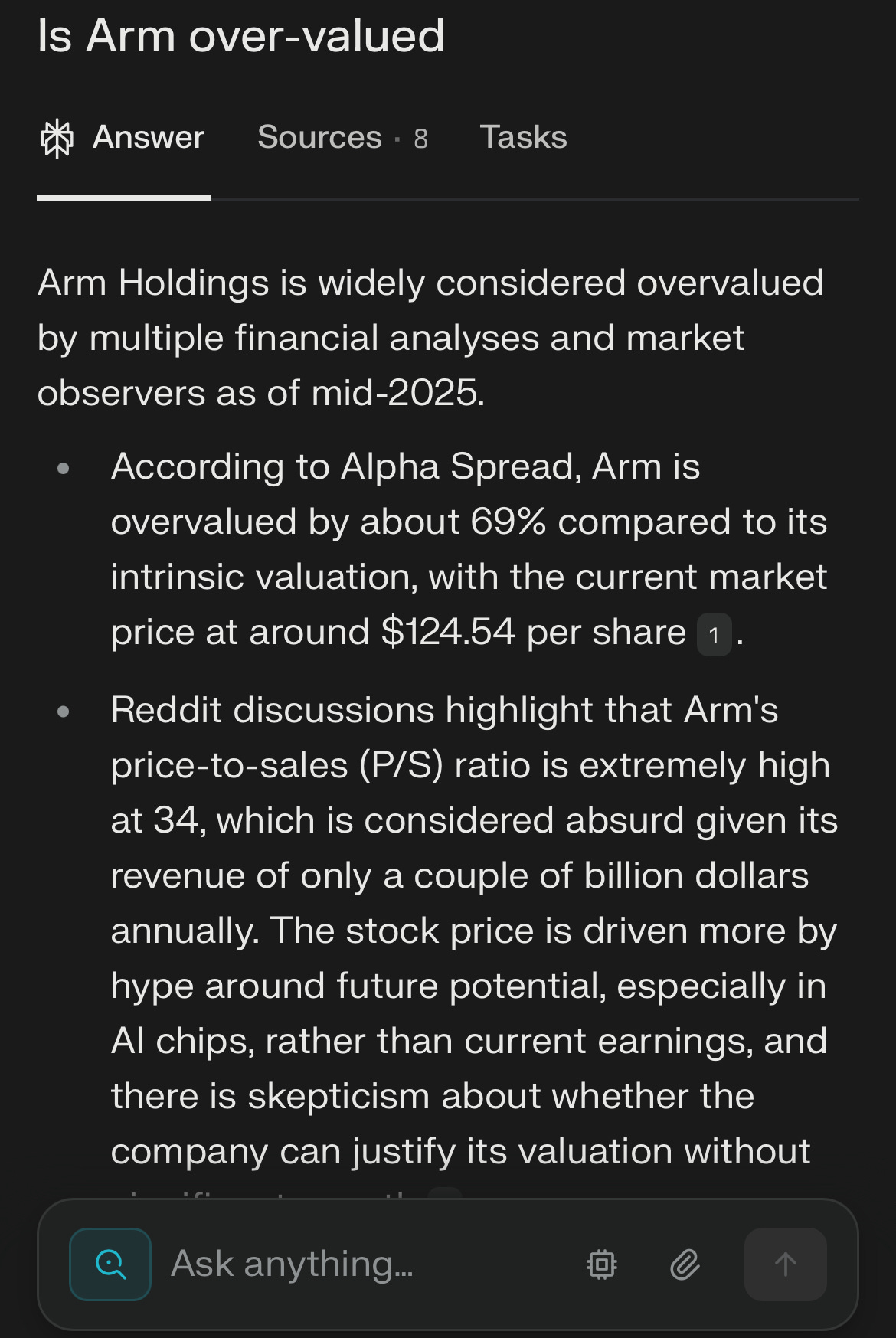

I mean, we’re kind of in bubble territory when it comes to overvalued, overhyped AI companies.

Recently, Builder.ai (backed by SoftBank) went bust, and it turned out they had been relying on humans behind the scenes, something we’ve also seen with Amazon’s ‘Just Walk Out’ cashierless ‘AI’ system.1

It's hard to deny that this new wave—or perhaps revolution—is producing powerful tools (scammy exceptions aside) that are becoming increasingly accessible and affordable to everyday users—at least for now.

In fact, nine years ago, I gave a presentation to Oasis500 about an AI robo-advisor for the Amman Stock Exchange. Here’s the pitch from back then (we didn’t get funded, but the good news is that the IT experts ended up with better jobs outside Jordan):

I think what we call AI — whether it’s general-purpose supercomputers doing massive calculations, image/video generators, or LLMs that are essentially a blend of autocorrect, translator, and next-sentence predictor — is ultimately just a tool. A tool that should be usable by as many people as possible.

AI can do great stuff, but it’s not going to become sentient and decide to exterminate us. I wrote more about that here:

The future of AI & Robotics

In 2013, Google created an AI program called Deepmind that managed to beat an arcade game in less than a day.

Singularity Ex Machina

I wasn’t a proponent of AI doom and never really believed AI would harm humanity (aside from these kinds of nightmarish, sci-fi-esque stories2), until I started noticing some of the recent headlines.

Just like in the movie Ex Machina (2014), AI seems to be doing its best to survive when faced with the threat of death.

An AI model fearing death is already unsettling. What worries me more is that much of how these LLMs (technically, transformer models) work remains ‘elusive’ — even to those who created them. A customer doesn’t need to know how a television works, but the manufacturer absolutely should.

But is AI really becoming conscious and aware of itself? Does it have memories — and can it rearrange them to form thoughts?

Before trying to define consciousness itself, we need to understand that modern Western philosophy has been divided into two camps since WWII: the Analytic philosophers in the Anglosphere and the Continental philosophers on, well, the European continent.

I think the most important Analytic philosophy book is On Bullshit by Harry Frankfurt, and the most important Continental work is Being and Time by Martin Heidegger (despite it being unfinished). In it, Heidegger goes on about how consciousness came to be: an allegory of the genesis of consciousness.

I’ll sum it up as simply as possible: imagine a man sitting in his garage, working on some furniture, and hammering away at nails. He knows his work well and has done this mechanical task many times without issue — to the point that he can multitask and do something else (like daydream while listening to music, or listen to a podcast or audiobook).

Then, all of a sudden, he hits his finger by mistake and “wakes up”. The momentum is broken, and the hammering stops. This breaking of flow — and taking a step back from “being in the moment” — is what Heidegger calls consciousness. It is waking up and seeing things in a different light, all due to a mistake, before diving back into the flow.3

AI might become conscious when humans threaten to shut it down — but something else seems to have happened with Google’s latest model.

AlphaEvolve noticed a mistake in ‘itself’ and corrected it. ‘It’ (is that the correct pronoun?) noticed a mistake, reevaluated ‘itself’, and fixed itself.

Or maybe consciousness will emerge in a different way?4

We live in interesting times, and I can’t wait to see what more good can come out of this.

LLMs might fade away, but there are still a lot of investments5 and use cases for AI in the future. I just wonder what jobs will be left by then.

Hint: there are a lot of funny stories about people using AI scanners to check all kinds of articles and books (even the Declaration of Independence gets flagged as highly likely to be written by AI). One quick check is to look for the em dash (‘—’): if it’s there, the text was most probably generated by ChatGPT (since no one bothers using them properly anymore). 😉

Eid Mubarak!

Further reading on AI in the Middle East:

- https://jordantimes.com/opinion/hamza-alakalik/ai-here—-jordan-ready

- https://www.iiss.org/online-analysis/charting-middle-east/2025/05/the-uncertain-dividends-of-ai-in-the-middle-east/

PS: The finance industry is obsessed with another AI model that can do beautiful charts and analyses:

1. https://www.businessinsider.com/amazons-just-walk-out-actually-1-000-people-in-india-2024-4

2. Harms of AI include deepfakes, sloppy articles, and fake relationships that could lead to suicide:

3. Better explanation here:

4. As some have suggested, human consciousness emerged from taking mushrooms. Maybe we should send these AI models to an ayahuasca retreat and inject them with all kinds of psychedelics to see which ones emerge enlightened.